April 25, 2023

Why Enclaves Exist

In this post, we give a simple model for understanding why trusted execution environments (enclaves) exist.

Summary

- The future of computers will be defined by non-von Neumann architectures. However, von Neumann architecture remains important for understanding the main components of computers: processor, memory, I/O.

- As computer form factors decrease in size (Bell’s Law), they become resource-constrained (limited processing and memory capacity). Therefore, resource-intensive workloads are computed remotely — hence virtualized cloud computing.

- Confidential Computing is essentially about the isolation and attestation of the main components (as defined by von Neumann architecture) of a remote (virtualized) computer — so that the remote developer and remote user have verification of the integrity and confidentiality of code and data being stored and processed in remote memory and processors.

- Open computer architectures based on the RISC-V instruction set architecture will lead to a standard hardware (and low-level software) TEE base implementation, meaning that developers can target a standard TEE architecture — as opposed to the disparate standards and implementations of proprietary ones. Processors, memory, and I/O will be isolated and attested in basically the same way. This applies to both local and remote TEEs — and regardless of the form of the computer (Bell’s Law).

- All remote (and, perhaps, local) computation should be trusted computation (isolated and attested). Evervault Cages enable all computations to be trusted computations by providing a simple interface for developers to deploy and run applications in TEEs — and to package containers that are portable from TEE environment to TEE environment (starting with AWS Nitro Enclaves).

von Neumann Architecture

Moore’s Law (which, broadly, postulates that computational power doubles for the same price approximately every 1.5 to 2 years) will in future be sustained by non-von Neumann (“non-von”) computers. Three factors limit von Neumann computers: their inherently sequential (digital) nature, the von Neumann bottleneck (the limited bandwidth between processor and memory), and Heisenberg uncertainty in hardware miniaturization.

Non-von computers adopt parallel (analog), distributed and/or processing-in-memory architectures. Examples include DNA computers, optical computers, and quantum computers.

It’s clear we’re not in von Neumann’s universe anymore (at least with regard to computer architecture). However, von Neumann architecture lets us understand the fundamental components of computers, which will be important to understanding TEEs.

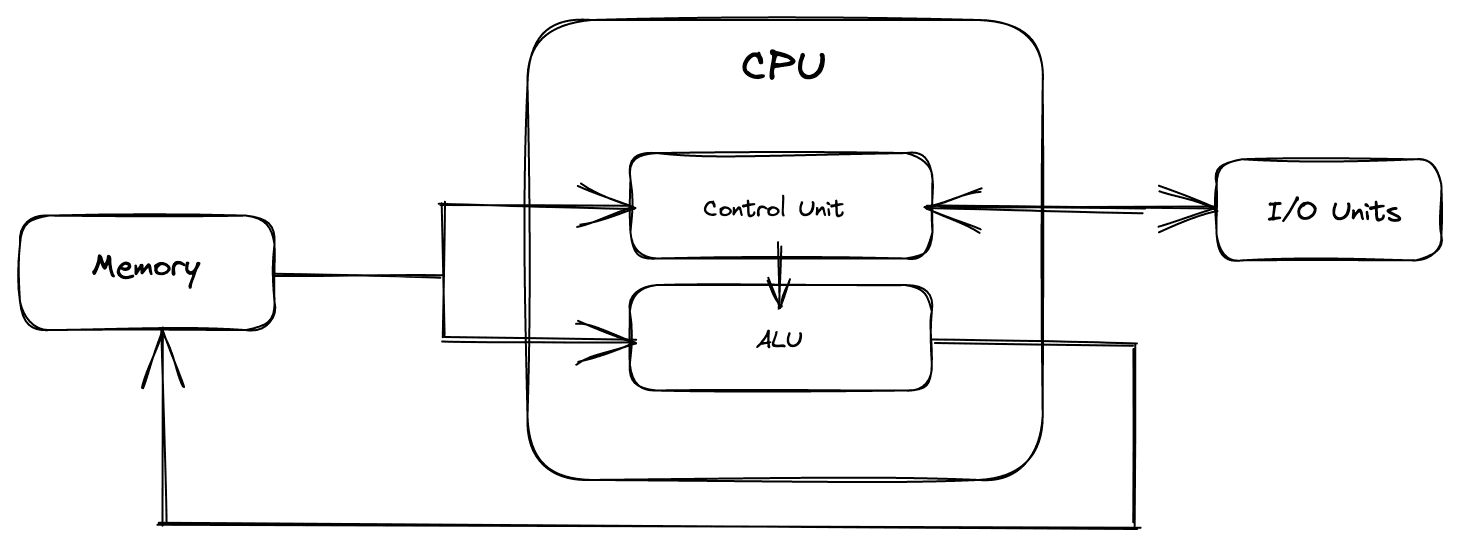

Fig. 1: von Neumann computer architecture.

Figure 1 presents the basic architecture of a von Neumann computer. There are three main components: CPU (processor), memory, and I/O.

Note: special-purpose processors (GPUs and custom ASICs) are often orders of magnitude more efficient than a CPU for parallel workloads such as graphics and machine learning. Open architecture standards (like RISC-V) will enable a greater rate of improvement of application-specific computers and, as we will see, application-specific TEEs.

The CPU reads instructions (code) and data from the main memory and coordinates the complete execution of each instruction.

The CPU contains an arithmetic logic unit (ALU) and a control unit. The ALU combines and transforms data using arithmetic and logic operations. The control unit interprets the instructions fetched from the memory and coordinates the operation of the entire system. It determines the order in which instructions are executed and provides all of the electrical signals necessary to control the operation of the ALU and the interfaces to the other system components.

The memory is a collection of storage cells, each of which can be in one of two different states, enabling the storage of data and code as binary digits. Memory can be accessed in several ways: sequentially, randomly (by address), or by content (content-addressable).

The input/output (I/O) devices interface the computer system with the outside world. I/O allows code and data to be entered into the system and provides a means for the system to control some type of output device.

The two key concepts of the von Neumann architecture:

- Data and instructions are stored in the memory system in exactly the same way.

- The order in which a program executes its instructions (code) is sequential (and memory is sequentially addressed and is one-dimensional).
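To make these two concepts concrete, here is a minimal sketch of a toy von Neumann machine. The four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) and the `run` function are our own illustrative assumptions, not a real ISA. Code and data sit in the same flat memory, and a program counter steps through it sequentially.

```python
# Toy von Neumann machine: one flat memory holds both instructions and data.
# The instruction set (LOAD/ADD/STORE/HALT) is hypothetical, chosen for illustration.

def run(memory):
    """Fetch, decode and execute instructions sequentially until HALT."""
    pc = 0    # program counter (control unit): address of the next instruction
    acc = 0   # accumulator: the register the ALU reads from and writes to
    while True:
        opcode, operand = memory[pc]   # fetch from the same memory that holds data
        pc += 1                        # sequential execution: move to the next address
        if opcode == "LOAD":
            acc = memory[operand]
        elif opcode == "ADD":
            acc = acc + memory[operand]   # ALU operation
        elif opcode == "STORE":
            memory[operand] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {opcode!r}")

# Addresses 0-3 hold code, addresses 4-6 hold data; only convention separates them.
program = [
    ("LOAD", 4),    # acc = memory[4]
    ("ADD", 5),     # acc = acc + memory[5]
    ("STORE", 6),   # memory[6] = acc
    ("HALT", 0),
    2,              # address 4: data
    3,              # address 5: data
    0,              # address 6: result
]

result = run(list(program))
print(result[6])  # 5
```

Because nothing but convention separates the code cells from the data cells, anything that can write to memory can also change the program. This is part of why protecting memory matters so much later in this post.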

This is sufficient information on von Neumann architecture for this post. For more detail, we recommend (Neu45) and (Eig98).

Bell’s Law

According to Bell’s Law, a new class of computers with a new (smaller) form factor is developed every decade: mainframes in the 50s, minicomputers in the 60s, workstations in the 70s, personal (desktop) computers in the 80s, laptops in the 90s, smartphones in the 00s, (millimeter-scale) IoT sensors and embedded devices in the 10s.

Bell’s Law is a useful model for understanding how computer networking evolves. More specifically, Bell’s Law is a useful model for understanding the emergence of (remote) cloud computing.

Simply: as the size of computers decreases they become resource-constrained, so more resource-intensive computations need to be performed remotely. To date, (virtualized) cloud computing has provided the predominant computing paradigm for remote computation.

To understand the cloud computing paradigm in more detail, we can briefly consider it from a technical perspective and an economic perspective.

From a technical perspective, cloud computing essentially concentrates (remote) computations into a high-performance server cluster within a virtualized data center.

Note: Virtualization is the software simulation of a physical computer, behaving identically to the real machine. The simulation is called a virtual machine and the simulator software is called a virtual machine monitor (VMM) or hypervisor. For more on virtualization, see Disco (which became VMware) (Bug97) and Xen (which enabled AWS to launch EC2 in 2006, before AWS created its own hypervisor for the Nitro System and Nitro Enclaves) (Bar03). For more insight into distributed cloud computing, we recommend (Kil20).

Cloud computing uses virtualization to provide the sharing of physical machines (servers) between users. Virtual machine instances normally share physical CPUs and I/O interfaces with other instances (which is why trusted execution environments are important — to isolate memory).
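As a rough illustration of this sharing, the sketch below models a hypervisor that backs each virtual machine's pages with private frames of one physical memory. `ToyHypervisor` and all of its methods are hypothetical names of our own. Real hypervisors rely on hardware page tables rather than Python dictionaries.

```python
# Toy model of a hypervisor sharing one physical memory between virtual machines.
# All class and method names are hypothetical; real hypervisors use hardware page tables.

class ToyHypervisor:
    def __init__(self, num_frames):
        self.physical = [0] * num_frames   # shared physical memory, one value per frame
        self.free = list(range(num_frames))
        self.page_tables = {}              # vm name -> {guest page -> physical frame}

    def create_vm(self, name, num_pages):
        """Give a VM its own guest address space backed by private physical frames."""
        if num_pages > len(self.free):
            raise MemoryError("not enough physical frames")
        self.page_tables[name] = {page: self.free.pop(0) for page in range(num_pages)}

    def write(self, vm, page, value):
        self.physical[self._translate(vm, page)] = value

    def read(self, vm, page):
        return self.physical[self._translate(vm, page)]

    def _translate(self, vm, page):
        # A guest can only reach frames that appear in its own page table.
        table = self.page_tables[vm]
        if page not in table:
            raise PermissionError(f"{vm} has no mapping for page {page}")
        return table[page]

    def dump_physical(self):
        # The hypervisor itself sees every frame, regardless of the owner.
        return list(self.physical)


hv = ToyHypervisor(num_frames=4)
hv.create_vm("tenant_a", 2)
hv.create_vm("tenant_b", 2)
hv.write("tenant_a", 0, 111)
hv.write("tenant_b", 0, 222)

print(hv.read("tenant_a", 0))  # 111
print(hv.read("tenant_b", 0))  # 222
print(hv.dump_physical())      # [111, 0, 222, 0]
```

In this model the tenants are isolated from each other, but only because the hypervisor enforces it. The hypervisor itself can still read every frame, and that is the trust a TEE aims to remove.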

From an economic perspective, cloud computing essentially transforms the fixed cost of owning physical computers (hardware) into a variable cost for users (developers)(Bez11). Developers can focus on building applications rather than data centers — and cloud service providers can focus on operating global, distributed data centers providing lower-latency services for end-users that developers would not be able to offer with their own servers.

(Bez11) > All AWS services are pay-as-you-go and radically transform capital [fixed] expense into a variable cost. AWS is self-service: you don’t need to negotiate a contract or engage with a salesperson – you can just read the online documentation and get started. AWS services are elastic – they easily scale up and easily scale down.
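A small worked example shows what turning a fixed cost into a variable cost means in practice. All prices below are made-up numbers for illustration only, and the model deliberately ignores power, staffing and depreciation.

```python
# Fixed vs variable cost of compute. All prices below are made-up illustrative numbers.

def owned_cost(hours_used, purchase_price):
    """Owning hardware: the full price is paid up front, whatever the usage."""
    return purchase_price

def cloud_cost(hours_used, hourly_rate):
    """Pay-as-you-go: cost scales with the hours actually used."""
    return hours_used * hourly_rate

def break_even_hours(purchase_price, hourly_rate):
    """Usage level at which renting costs the same as owning."""
    return purchase_price / hourly_rate

PURCHASE_PRICE = 6000.0   # hypothetical server price
HOURLY_RATE = 0.50        # hypothetical on-demand price per hour

for hours in (1_000, 12_000, 20_000):
    print(hours, owned_cost(hours, PURCHASE_PRICE), cloud_cost(hours, HOURLY_RATE))

print(break_even_hours(PURCHASE_PRICE, HOURLY_RATE))  # 12000.0
```

Below the break-even point the variable cost is lower, which is the situation of most developers who are just getting started. Above it, owning hardware can become cheaper again.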

A second point to note on the economic perspective: the origin of cloud computing was an attempt to price the internet (computation) via dynamic priority pricing-based network management (Che02).

As defects and inefficiencies are removed from the high-performance cluster (technical), costs and pricing models (economic) improve, and vice versa. (Both of these factors improve remote resource-intensive computation for users with resource-constrained computers.)

Note: Computer classes continue to improve (owing to Moore’s Law (broadly defined)). Therefore, as the cost of desktop computers with greater memory and processing capacity decreases, we may (in some applications) return to resource-intensive computing being performed on local computers — rather than on remote virtual machines. That is to say, cloud computing may not be the best way to “price the internet”.

Confidential Computing

The cloud computing (and networking) paradigm is the reason why confidential computing (CC) and cloud TEEs have emerged.

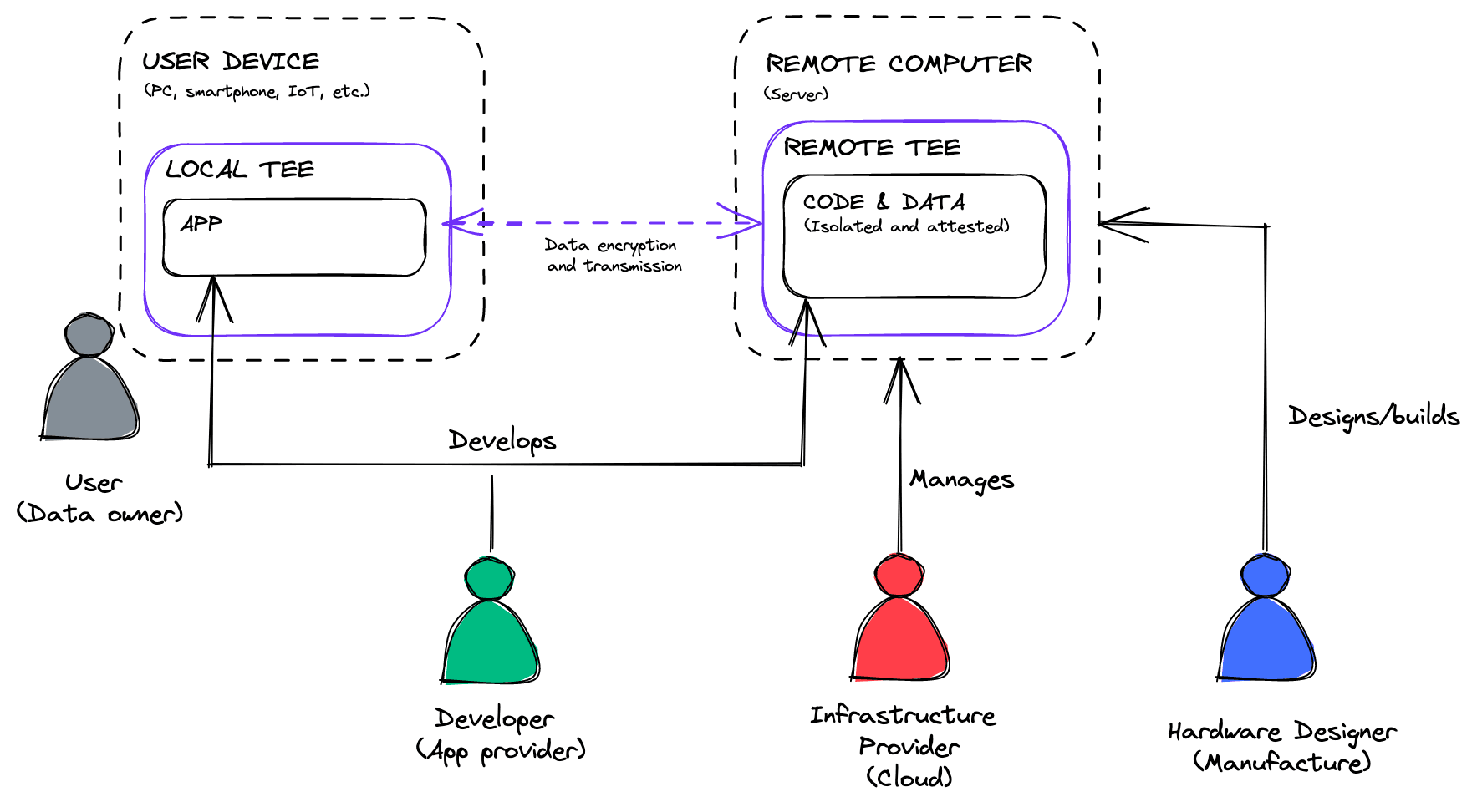

In simple terms, CC is the protection of code and data that is being run and processed on a remote (virtualized) computer. Because most (resource-intensive) computations of code and data are performed on remote computers owned and manufactured by (un)trusted parties (see Fig. 2), developers need a way to guarantee the integrity of the hardware and software of the remote computer — and the integrity/confidentiality of their code and data.

Note: We will not discuss the confidential computing space in great detail here. We provide brief comments under the (Sar21) reference (which is useful for a more detailed taxonomy of CC).

Fig. 2: Secure and trusted remote computation. The diagram includes all the parties involved in the cloud computing paradigm. It includes “local TEEs” and “remote TEEs” because most computers will have (and many already do have) some form of secure enclave. (TEEs have their origin in mobile devices; see (Ekb14).) This is one reason for our closing comment that all computations should (and will) be trusted computations (isolated and attested). (Note: not all computations will be performed inside the TEE.)

CC requires confidential memory, confidential processing, and confidential I/O (all of the main elements of a computer as defined by von Neumann). While TEE designs vary to a large degree, these are all achieved via some form of hardware-based (and software-based) isolation and attestation.

(Lee22) > TEEs are isolation technology that uses various hardware mechanisms, usually combined with low-level trusted software, to evict unnecessary code from the trusted computing base (TCB). In a nutshell, TEEs aim to protect code integrity, data integrity, and data confidentiality from various software adversaries, including a compromised OS. In general, TEEs provide a program [and data] with an exclusive memory region where the program can reside [either with or without persistence]. TEEs use hardware mechanisms to protect the memory so that even privileged software cannot arbitrarily read from or write to the memory … Additionally, most TEEs offer remote attestation, which allows the user of the TEE to cryptographically verify that the program has been initialized and isolated. Remote attestation allows the user to securely launch remote programs and provision secrets without trusting privileged software.
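The remote attestation flow described in the quote can be sketched in a few lines. This is a simplified model under loud assumptions: an HMAC with a shared key stands in for the asymmetric, manufacturer-certified signature that real TEE hardware produces. `HARDWARE_KEY`, `measure`, `attest` and `verify` are hypothetical names of our own, not any vendor's API.

```python
# Minimal sketch of remote attestation. Assumption: an HMAC with a shared key stands in
# for the asymmetric, manufacturer-certified signature that real TEE hardware produces.
import hashlib
import hmac
import secrets

HARDWARE_KEY = secrets.token_bytes(32)   # hypothetical key fused into the TEE hardware

def measure(code: bytes) -> bytes:
    """Measurement: a hash of the code loaded into the enclave."""
    return hashlib.sha256(code).digest()

def attest(code: bytes, nonce: bytes) -> dict:
    """Runs inside the TEE: report what was loaded, bound to the verifier's nonce."""
    measurement = measure(code)
    signature = hmac.new(HARDWARE_KEY, measurement + nonce, hashlib.sha256).digest()
    return {"measurement": measurement, "nonce": nonce, "signature": signature}

def verify(report: dict, expected_code: bytes, nonce: bytes, key: bytes) -> bool:
    """Runs on the user's side before any secret is provisioned."""
    expected_sig = hmac.new(
        key, report["measurement"] + report["nonce"], hashlib.sha256
    ).digest()
    return (
        hmac.compare_digest(report["signature"], expected_sig)                  # authentic
        and hmac.compare_digest(report["nonce"], nonce)                         # fresh
        and hmac.compare_digest(report["measurement"], measure(expected_code))  # right code
    )

app = b"def handler(card_number): ..."
nonce = secrets.token_bytes(16)

good_report = attest(app, nonce)
print(verify(good_report, app, nonce, HARDWARE_KEY))       # True

tampered_report = attest(app + b" # backdoor", nonce)
print(verify(tampered_report, app, nonce, HARDWARE_KEY))   # False
```

The user only provisions secrets after `verify` succeeds. The nonce prevents an old report from being replayed, and the measurement ties the report to the exact code the user expects to be running.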

Open architectures

As noted above, the designs of TEEs vary to a large degree. This is because the design (and manufacture) of all classes of computers (processors) has been largely proprietary, and the standards for instruction set architectures (ISAs, the lowest abstraction layer between hardware and software, which informs computer design) have been proprietary too: for example, x86 (Intel, AMD), ARM, and Power (IBM). (Intel, AMD, ARM and IBM would be considered the “hardware designer” in Fig. 2.)

This has resulted in TEE architectures being proprietary: SGX and TDX from Intel, SEV (including SEV-ES and SEV-SNP) from AMD, TrustZone and CCA from ARM, and PEF from IBM. These generally only work on specific underlying architectures and have disparate isolation and attestation standards and implementations.

RISC-V is changing both of these things. RISC-V is an open instruction set architecture (i.e. an open standard for implementing processor architecture).

(Hen19) > Inspired by the success of open source software, [there is an] opportunity in computer architectures: open ISAs. To create a “Linux for processors” the field needs industry-standard open ISAs so the community can create open source cores, in addition to individual companies owning proprietary ones. If many organizations design processors using the same ISA, the greater competition may drive even quicker innovation. The goal is to provide processors for chips that cost from a few cents to $100.

(Wat17) > Our position is that the ISA is perhaps the most important interface in a computing system, and there is no reason that such an important interface should be proprietary. The dominant commercial ISAs are based on instruction set concepts that were already well known over 30 years ago. Software developers should be able to target an open standard hardware target, and commercial processor designers should compete on implementation quality.

RISC-V has a working group for creating an open standard for TEE architectures — so that TEEs work across architectures and have the same fundamental isolation and attestation bases.

(Sah23) > Confidential computing requires the use of a [hardware]-attested Trusted Execution Environments for data-in-use protection. The RISC-V architecture presents a strong foundation for meeting the requirements for Confidential Computing and other security paradigms in a clean slate manner.

(Sah22) > The [RISC-V TEE] interface specification enables application workloads that require confidentiality to reduce the Trusted Computing Base (TCB) [the hardware, software and firmware elements that are trusted by a relying party to protect the confidentiality and integrity of the relying parties' workload data] to a minimal TCB, specifically, keeping the host OS/VMM and other software outside the TCB.

In simple terms: open computer architectures based on the RISC-V instruction set architecture will lead to a standard hardware (and low-level software) TEE base implementation, meaning that developers can target a standard TEE architecture — as opposed to the disparate standards and implementations of proprietary ones. Processors, memory, and I/O will be isolated and attested in basically the same way. This applies to both local and remote TEEs — and regardless of the form of the computer (Bell’s Law).

The future of computers will be defined by customized and composite processors (designed based on open standards) and by customized and composite TEEs (designed based on open standards). In the same way fully custom ASICs are used for specific computations to improve performance, we may see application-specific TEEs emerge to improve security in specific applications. (We can understand AWS Nitro Enclaves as application-specific TEEs, given that they are based on the customized Nitro System: hardware and hypervisor.)

(Lee20) > Customizable TEEs are an abstraction that allow entities … to configure and deploy various TEE designs from the same [trusted computing base]. Customizable TEEs promise independent exploration of gaps/trade-offs in existing designs, quick prototyping of new feature requirements, a shorter turn-around time for fixes, adaptation to threat models, and usage-specific deployment.

(Sch21) > The ever-rising computation demand is forcing the move from the CPU to heterogeneous specialized hardware [GPUs], which is readily available across modern data-centers through disaggregated infrastructure. On the other hand, trusted execution environments (TEEs), one of the most promising recent developments in hardware security, can only protect code confined in the CPU, limiting TEEs’ potential and applicability to a handful of applications. We … propose composite enclaves with a configurable hardware and software TCB, allowing enclaves access to multiple computing and IO resources.

(As an aside, as open ISAs like RISC-V are adopted, perhaps there will be even greater demand for the limited capacity of fabricators like TSMC (the leading processor fabricator) and their suppliers like ASML (which builds 100% of the world’s extreme ultraviolet lithography machines, without which it is impossible to manufacture leading processors).)

Evervault Cages

In short, TEEs will be (and are) deployed in all forms of computers — from cloud servers and desktops to smartphones and low-energy IoT sensors and embedded devices (Bell’s Law).

It is clear that all remote (and, perhaps, local) computation should be trusted computation (isolated and attested).

In the same way Docker (which is a form of virtualization) made it easy to package applications into containers that are portable from environment to environment, Evervault Cages enable all computations to be trusted computations by providing a simple interface for developers to deploy and run applications in TEEs — and to package containers that are portable from TEE environment to TEE environment (starting with AWS Nitro Enclaves).

(Win22) > While Nitro enclaves are still young and have received nowhere near the same scrutiny as SGX and friends, we believe that their dedicated hardware resources provide stronger protection from side channel attacks than enclaves that are based on shared CPU resources.

References

(Neu45) von Neumann, J., 1945. First Draft of a Report on the EDVAC. Exact copy of the original typescript draft.

(Bug97) Bugnion, E., Devine, S., Govil, K. and Rosenblum, M., 1997. Disco: Running commodity operating systems on scalable multiprocessors. [Open Access]

(Eig98) Eigenmann, R. and Lilja, D.J., 1998. Von Neumann computers.

(Che02) Chellappa, R.K. and Gupta, A., 2002. Managing computing resources in active intranets.

(Bar03) Barham, P., Dragovic, B., Fraser, K., Hand, S., Harris, T., Ho, A., Neugebauer, R., Pratt, I. and Warfield, A., 2003. Xen and the art of virtualization.

(Bez11) Bezos, J., 2012. Amazon.com 2011 Annual Letter to Shareholders.

(Ekb14) Ekberg, J.E., Kostiainen, K. and Asokan, N., 2014. The untapped potential of trusted execution environments on mobile devices. [Open Access]

(Cos16) Costan, V. and Devadas, S., 2016. Intel SGX explained. Cryptology ePrint Archive 2016/086.

Useful summary of Intel SGX, the TEE that has received the most attention from academic security researchers. Gives a useful overview of secure and trusted computation.

(Wat17) Waterman, A., Lee, Y., Patterson, D.A. and Asanovic, K., 2011. The RISC-V Instruction Set Manual Volume I: User-Level ISA.

For more insight into instruction set architectures, we recommend Computer Organization and Design RISC-V Edition.

(Hen19) Hennessy, John L., and Patterson, David A., 2019. A new golden age for computer architecture.

Honestly, just a fun watch/read.

(Lee20) Lee, D., Kohlbrenner, D., Shinde, S., Asanović, K. and Song, D., 2020, April. Keystone: An open framework for architecting trusted execution environments. [Open Access]

Proposes Keystone, the first open-source framework for building customized TEEs based on RISC-V.

(Kil20) Killalea, T., 2020. A Second Conversation with Werner Vogels. [Open Access]

(Sar21) Sardar, M.U. and Fetzer, C., 2021. Confidential Computing and Related Technologies: A Critical Review.

Provides a taxonomy of confidential computing — including multi-party computation and homomorphic encryption.

Note: With respect to the main components of von Neumann architecture, the difference between HE/MPC, on the one hand, and TEEs on the other hand:

HE and MPC protect data with encryption of inputs and outputs (I/O) so that, in the case of HE, data remains encrypted at all times (including while being processed).

TEEs protect data and code with hardware-based (or software-based) isolation (and attestation) of the CPU and memory components of the physical von Neumann computer.

Isolation and attestation of code is still needed for HE and MPC, so all three are complementary.

(Sch21) Schneider, M., Dhar, A., Puddu, I., Kostiainen, K. and Capkun, S., 2020. Composite Enclaves: Towards Disaggregated Trusted Execution. arXiv preprint arXiv:2010.10416.

Proposes composite TEEs — enabling GPUs to be used for trusted execution.

(Lee22) Lee, D., 2022. Building Trusted Execution Environments. EECS Department, University of California, Berkeley. Technical Report No. UCB/EECS-2022-96.

This is the PhD thesis related to Keystone (Lee20). Gives a brief history of trusted execution environments — starting with mobile TEEs to protect network operators. (See (Ekb14) for more detail on mobile TEEs.)

(Win22) Winter, P., Giles, R., Davidson, A. and Pestana, G., 2022. A Framework for Building Secure, Scalable, Networked Enclaves. arXiv preprint arXiv:2206.04123.

Gives a useful overview of AWS Nitro Enclaves.

(Sah23) Sahita, R., et al., 2023. CoVE: Towards Confidential Computing on RISC-V Platforms. arXiv preprint arXiv:2304.06167.

Gives an insight into RISC-V TEEs. Includes a diagram of the RISC-V TEE reference architecture.