Beyond payment tokenization: Why developers are choosing Evervault's encryption-first approach

How Evervault’s dual-custody encryption model eliminates the fundamental limitations of traditional tokenization for PCI compliance

Over the last five years, confidential computing has dazzled the security world. Often branded as secure enclaves or TEEs, these hardware-secure compute environments protect sensitive data by providing a mechanism to verify that real-time processing can be trusted.

Confidential computing involves two complex systems. First, it requires hardware-driven cryptographic attestation, a technique for verifying in real time that an environment is legitimate. Second, it uses hardware-held keys to decrypt sensitive data.

In this article, we have three goals. The first is to establish why confidential computing was invented; security in itself is nice, but it’s important to understand the exact breaches that were curbed by the advent of confidential computing. Second, we’ll explore how confidential computing actually works without oversimplifying it. Finally, we’ll illuminate the pitfalls and why those pitfalls aren’t enough to make a case against leveraging confidential computing.

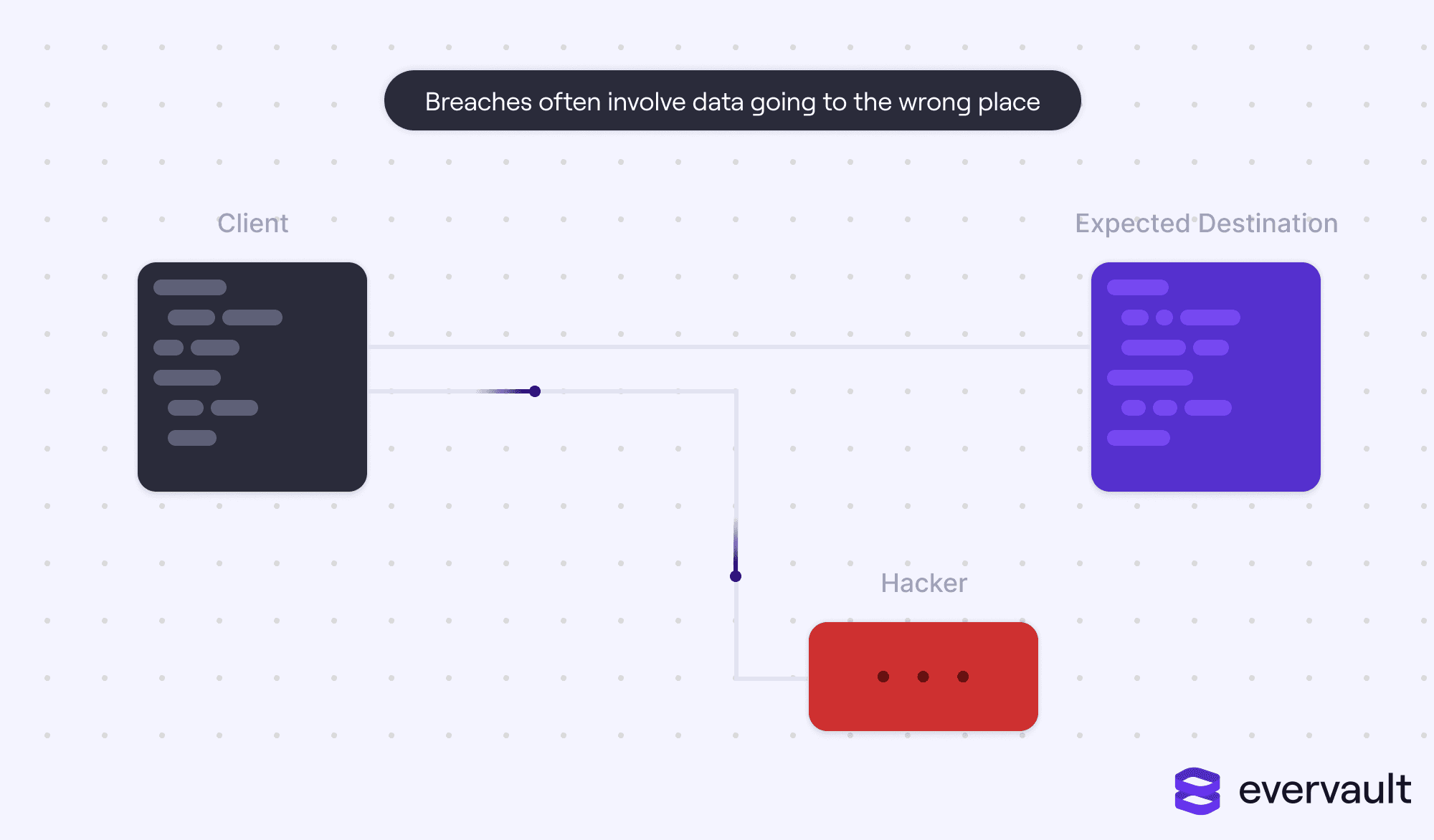

In cybersecurity diagrams, you’ll often see the word trust or trusted used to demarcate safe entities. Trusted sources. Trusted destinations. Trusted code. Trusted services.

This can be a little deceiving. In reality, nothing can be trusted; we only trust something relative to the other (and typically default) option. For example, a senior developer might be considered a trusted person, but hypothetically, their credentials could’ve been phished. They are only considered trusted within the scope of the existing security problem.

Often, security diagrams involving data breaches differentiate between trusted machines (company laptops and company servers) and bad agents (hacker-owned destinations).

But, taking a step back, we know that even company laptops are not 100% safe. There is always the risk that they’ve been infected by malware. That’s why companies like Jamf and Zip exist: to ensure that proper security protocols are followed to minimize the risk of infection.

The same applies to company servers, which today are typically hosted on massive cloud platforms such as AWS, Azure, or GCP. Those platforms do offer anti-malware software like Trend Micro, but they also pose a separate risk. Unlike an employee laptop, which spends most of its life out of reach in an employee’s apartment, work bag, or desk, a cloud server is managed by the cloud provider, a third party. And, just like any other organization, cloud providers can be breached. Additionally, developers don’t upload their application code using a USB thumb drive (though the thought of that is hilarious). They use third-party-managed CI/CD workflows, which dramatically increases the attack surface of a cloud machine.

To compound matters, cloud servers are routinely touching and processing sensitive production data. That’s actually what matters. A leak of sensitive data can lead to lost customers. At worst, it can even be company-ending.

So, the challenge is set. Recognizing that production cloud-managed machines can be attacked, how can we ensure that sensitive data isn’t compromised? Presently, there are two solutions: a wholly impractical one and a popular one. These are homomorphic encryption and TEEs, respectively.

Homomorphic encryption is a technique that enables an untrusted machine to process data without decrypting it. It sounds a bit like magic, and unfortunately, it’s just as difficult.

To paint a picture, homomorphic encryption would let a machine compute c = a + b while a, b, and c all stay encrypted. The machine only ever sees ciphertexts: it combines enc(a) and enc(b) into enc(c), and only the key holder can decrypt the result. The field has evolved considerably since 2009, when Craig Gentry introduced the first fully homomorphic scheme, built on lattice-based encryption. The issue was that it was incredibly slow: the fastest trial carried out a single operation in 30 seconds for impractically small lattices, and more realistic examples took 15 to 30 minutes per operation.
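To make the idea concrete, here is a toy sketch of an additively homomorphic scheme. It uses the Paillier cryptosystem, swapped in purely for illustration: Paillier supports only addition, so it is not fully homomorphic like Gentry’s lattice construction, and the tiny hard-coded primes make it trivially breakable. The function names are hypothetical. The untrusted machine only ever calls `add_encrypted`, which multiplies two ciphertexts; the key holder decrypts the product and gets the sum of the plaintexts.

```python
import math
import secrets

# Toy Paillier cryptosystem: additively homomorphic, NOT fully homomorphic.
# The tiny primes make it trivially breakable; illustration only.


def keygen(p: int = 293, q: int = 433):
    """Return (public_key, private_key) for two distinct small primes."""
    n = p * q
    g = n + 1                      # common choice of generator
    lam = math.lcm(p - 1, q - 1)   # Carmichael function of n
    # mu = L(g^lam mod n^2)^-1 mod n, where L(x) = (x - 1) // n
    mu = pow((pow(g, lam, n * n) - 1) // n, -1, n)
    return (n, g), (n, lam, mu)


def encrypt(pub, m: int) -> int:
    n, g = pub
    n_sq = n * n
    r = secrets.randbelow(n - 1) + 1
    while math.gcd(r, n) != 1:     # r must be invertible mod n
        r = secrets.randbelow(n - 1) + 1
    return (pow(g, m, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(priv, c: int) -> int:
    n, lam, mu = priv
    return ((pow(c, lam, n * n) - 1) // n * mu) % n


def add_encrypted(pub, c1: int, c2: int) -> int:
    """Runs on the untrusted machine: multiplying ciphertexts adds plaintexts mod n."""
    n, _ = pub
    return (c1 * c2) % (n * n)


if __name__ == "__main__":
    pub, priv = keygen()
    c = add_encrypted(pub, encrypt(pub, 20), encrypt(pub, 22))
    print(decrypt(priv, c))  # 42
```

Even this toy hints at the cost problem: every encryption and decryption is a modular exponentiation over n², and real schemes use far larger parameters.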

Since Gentry’s first algorithm, several advancements have sped up homomorphic encryption, including techniques that use approximate eigenvectors for multiplication. But these techniques also introduce error (noise) that can corrupt results in edge cases, making them unreliable. Their speed improvements are significant relative to Gentry’s first algorithm, but a single operation still takes a non-trivial amount of time. Modern applications, meanwhile, involve thousands of compute operations per user-driven action.

Today, homomorphic encryption is plainly impractical. It’s unclear whether it’ll ever be practical. Machines can only become so much more efficient. And applications are demanding more and more computations.

This narrows the challenge: can we ensure that data stays safe once it is decrypted on a cloud-managed machine? The solution is trusted execution environments, known as TEEs.

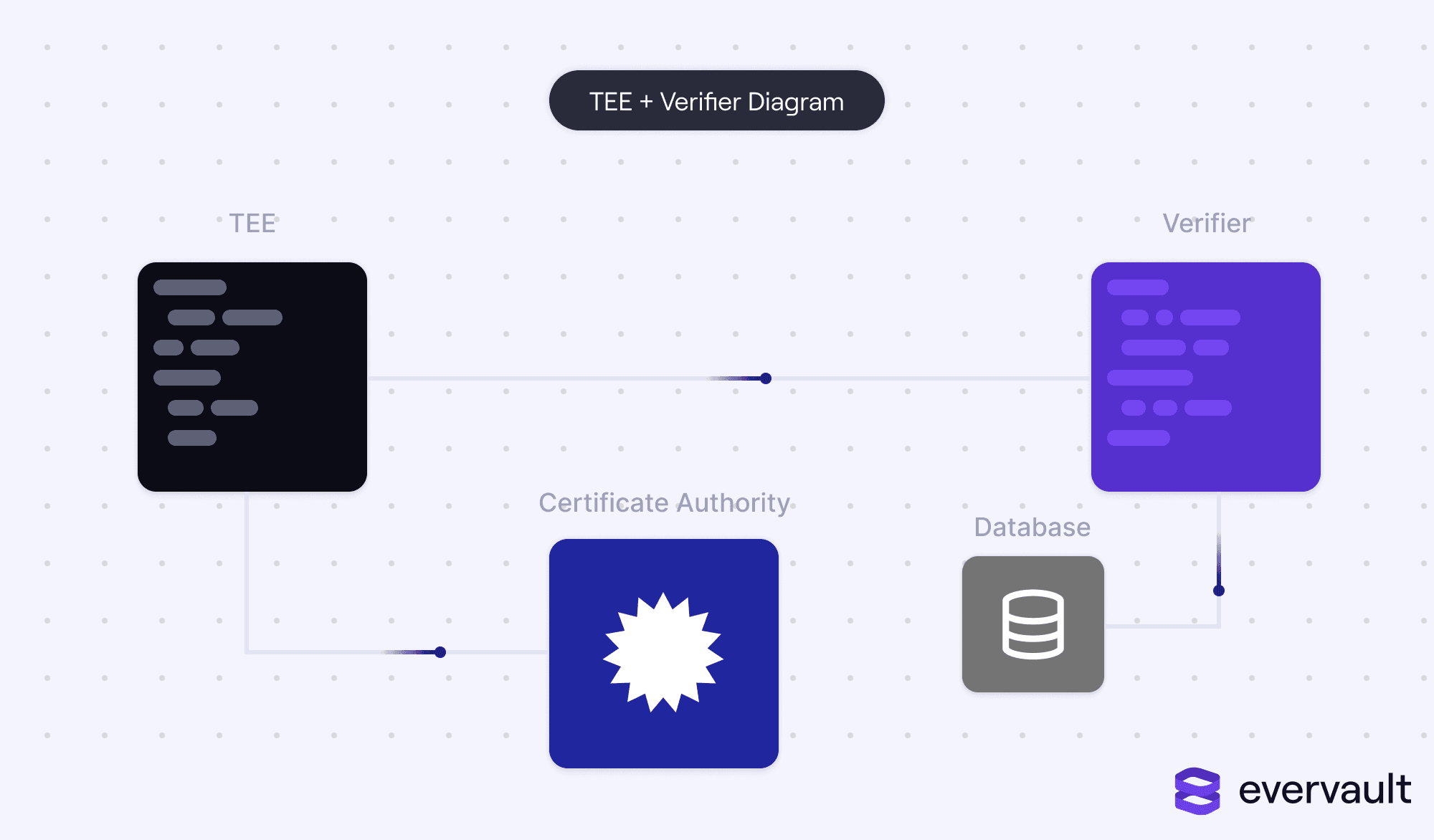

Trusted execution environments, often branded as secure enclaves, are isolated execution environments running on specially designed chips to enable trustworthy computing. However, what’s often understated about TEEs is that they rely on an external component: a verifier.

TEEs accomplish confidential computing via two discrete processes: (i) cryptographic attestation, which is a hardware-driven verification mechanism to prove a tamper-free environment and application; and (ii) stringent network isolation, to prevent outside access.

Let’s begin with what cryptographic attestation is not, though it is often explained this way: a TEE guaranteeing that the application code is legitimate. That is a massive oversimplification. Rather, a TEE can credibly transmit hashes (measurements) of its booted system and application to an external verifier, which checks that neither has been tampered with.
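The hashes a TEE reports are typically built up as a chain of measurements. Below is a minimal sketch of the TPM-style “extend” operation, assuming SHA-256 and a single register; real systems use several registers plus an event log, and the boot-stage names here are hypothetical. Because each value is folded into the previous one, changing any stage, or the order of stages, changes the final measurement that the verifier compares.

```python
import hashlib

PCR_SIZE = 32  # bytes in a SHA-256 register


def extend(pcr: bytes, component: bytes) -> bytes:
    """new_pcr = SHA-256(old_pcr || SHA-256(component))"""
    return hashlib.sha256(pcr + hashlib.sha256(component).digest()).digest()


def measure(components: list[bytes]) -> bytes:
    pcr = bytes(PCR_SIZE)          # registers start zeroed at reset
    for component in components:
        pcr = extend(pcr, component)
    return pcr


# Hypothetical boot chain; the verifier stores the resulting "golden" value.
BOOT_CHAIN = [b"firmware v1.2", b"bootloader v3", b"kernel 6.1", b"app build 8f2c"]
GOLDEN = measure(BOOT_CHAIN)

if __name__ == "__main__":
    tampered = BOOT_CHAIN[:-1] + [b"app build 8f2c + backdoor"]
    print(measure(tampered) == GOLDEN)                    # False: app changed
    print(measure(list(reversed(BOOT_CHAIN))) == GOLDEN)  # False: order changed
```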

The TEE relies on a hardware component, the Trusted Platform Module (TPM), to accomplish this. A TPM exposes cryptographic primitives to the TEE; specifically, it holds an immutable endorsement key (EK), a template-based key derived from a hard-coded seed value (the endorsement primary seed, or EPS).
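Because the EK is derived from the EPS and a key template rather than stored, the same TPM regenerates the same EK every time, and a different template yields a different key. The sketch below illustrates that determinism with an SP 800-108 counter-mode HMAC KDF, modeled on the input layout of the TPM 2.0 specification’s KDFa. The label, the use of a template hash as context, and the seed value are assumptions for illustration; a real TPM goes on to turn the derived material into an RSA or ECC key pair, which is omitted here.

```python
import hashlib
import hmac


def kdfa_sha256(key: bytes, label: bytes, context: bytes, bits: int) -> bytes:
    """SP 800-108 counter-mode KDF with HMAC-SHA256 (bits must be a multiple of 8)."""
    out = b""
    counter = 1
    while len(out) * 8 < bits:
        block = (counter.to_bytes(4, "big")     # iteration counter
                 + label + b"\x00"              # label, NUL-terminated
                 + context                      # caller-supplied context
                 + bits.to_bytes(4, "big"))     # total output length in bits
        out += hmac.new(key, block, hashlib.sha256).digest()
        counter += 1
    return out[: bits // 8]


# Hypothetical endorsement primary seed; in a real TPM it is set at manufacture
# and never leaves the chip.
EPS = bytes.fromhex("5a" * 32)


def derive_ek_material(eps: bytes, template: bytes) -> bytes:
    # Assumed inputs: label "EK" and a hash of the key template as context.
    return kdfa_sha256(eps, b"EK", hashlib.sha256(template).digest(), 256)


if __name__ == "__main__":
    rsa_template = b"RSA-2048, restricted decrypt, nameAlg SHA-256"
    ecc_template = b"ECC NIST P-256, restricted decrypt, nameAlg SHA-256"
    print(derive_ek_material(EPS, rsa_template) == derive_ek_material(EPS, rsa_template))  # True
    print(derive_ek_material(EPS, rsa_template) == derive_ek_material(EPS, ecc_template))  # False
```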

A TEE’s external interface for requests is hard coded to:

A common misconception is that the TPM uses its EK to carry out this attestation. This is false. An EK cannot be used to sign requests, only to encrypt and decrypt. It is an asymmetric key pair (commonly RSA) with a private and a public component, and the private component never leaves the TPM. Instead, the TPM uses an attestation key (AK), a signing key that attests to the TEE’s components, and the EK is used to prove that the AK lives in the same TPM. To do so, a request containing the AK public key and the EK public key is sent to a certificate authority (CA). The CA returns a value encrypted with the EK public key; the TPM proves itself by decrypting it with the EK private key, and the CA either accepts or rejects the result. This is made possible by OIDs (object identifiers), which let external parties verify TPM-related keys and TPM actions via an issuing CA.
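The challenge-response with the CA can be sketched as follows. It loosely mirrors the TPM 2.0 `TPM2_MakeCredential` / `TPM2_ActivateCredential` flow under heavy simplifications: textbook RSA with the classic toy primes 61 and 53 stands in for the EK, and an XOR mask plus an HMAC tag stand in for the TPM’s real symmetric encryption and integrity protection. All function names are hypothetical. Note that the EK only ever decrypts here; nothing is signed with it. Binding the seed to the AK’s name means the secret can only be recovered for that specific AK.

```python
import hashlib
import hmac
import secrets

# Toy textbook-RSA "EK". Insecure; illustration only.
P, Q, E = 61, 53, 17
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))
EK_PUBLIC = (N, E)     # shared with the CA
_EK_PRIVATE = (N, D)   # never leaves the (simulated) TPM


def _binding_key(seed: int, ak_name: bytes) -> bytes:
    # Key derived from the seed AND the AK's name, so the credential only
    # opens for that specific AK.
    return hmac.new(seed.to_bytes(2, "big"), ak_name, hashlib.sha256).digest()


def ca_make_credential(ak_name: bytes, secret: bytes):
    """CA side: wrap a fresh secret so only the EK holder, for this AK, can open it."""
    if len(secret) > 32:
        raise ValueError("toy XOR mask covers at most 32 bytes")
    seed = secrets.randbelow(N - 2) + 2                      # 2 <= seed < N
    key = _binding_key(seed, ak_name)
    wrapped = bytes(s ^ k for s, k in zip(secret, key))
    tag = hmac.new(key, wrapped, hashlib.sha256).digest()
    encrypted_seed = pow(seed, EK_PUBLIC[1], EK_PUBLIC[0])  # encrypt to EK public key
    return encrypted_seed, wrapped, tag


def tpm_activate_credential(encrypted_seed: int, wrapped: bytes, tag: bytes,
                            ak_name: bytes) -> bytes:
    """TPM side: decrypt with the EK private key and recover the secret."""
    seed = pow(encrypted_seed, _EK_PRIVATE[1], _EK_PRIVATE[0])
    key = _binding_key(seed, ak_name)
    expected = hmac.new(key, wrapped, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("credential is not bound to this EK and AK")
    return bytes(w ^ k for w, k in zip(wrapped, key))


if __name__ == "__main__":
    ak_name = hashlib.sha256(b"AK public area").digest()   # hypothetical AK name
    nonce = secrets.token_bytes(16)
    blob = ca_make_credential(ak_name, nonce)
    # The CA accepts the TPM only if it returns the original nonce.
    print(tpm_activate_credential(*blob, ak_name) == nonce)  # True
```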

That leaves the question of what to do with the attested measurements. The most basic approach is to set up a verifier that requests measurements from the TEE, checks them against a database of trusted measurements, and only then dispatches sensitive data to the TEE.
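Here is a minimal sketch of that basic verifier, assuming a single HMAC key shared with the TEE as a stand-in for the AK signature (real quotes are signed with the AK’s private key and checked against its certified public key). The verifier issues a fresh nonce, checks that the quote echoes it so an old quote cannot be replayed, checks the signature, compares the measurements against its trusted database, and only then hands over sensitive data. All names and measurement values are hypothetical.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass

# Stand-in for the AK: an HMAC key. Real quotes use an asymmetric AK signature.
AK_KEY = secrets.token_bytes(32)

# The verifier's database of known-good measurements (hypothetical values).
TRUSTED = {
    "kernel": hashlib.sha256(b"kernel 6.1").hexdigest(),
    "app": hashlib.sha256(b"app build 8f2c").hexdigest(),
}


@dataclass
class Quote:
    nonce: bytes
    measurements: dict
    signature: bytes


def _payload(nonce: bytes, measurements: dict) -> bytes:
    body = "|".join(f"{name}={digest}" for name, digest in sorted(measurements.items()))
    return nonce + body.encode()


def tee_quote(nonce: bytes, measurements: dict) -> Quote:
    """TEE side: report measurements, bound to the verifier's nonce."""
    sig = hmac.new(AK_KEY, _payload(nonce, measurements), hashlib.sha256).digest()
    return Quote(nonce, dict(measurements), sig)


def verify(quote: Quote, expected_nonce: bytes) -> bool:
    expected_sig = hmac.new(AK_KEY, _payload(quote.nonce, quote.measurements),
                            hashlib.sha256).digest()
    return (hmac.compare_digest(quote.nonce, expected_nonce)        # fresh, not replayed
            and hmac.compare_digest(quote.signature, expected_sig)  # signed by the "AK"
            and quote.measurements == TRUSTED)                      # known-good build


def dispatch_if_trusted(quote: Quote, nonce: bytes, data: bytes, send) -> None:
    if not verify(quote, nonce):
        raise PermissionError("attestation failed; sensitive data withheld")
    send(data)


if __name__ == "__main__":
    nonce = secrets.token_bytes(16)
    dispatch_if_trusted(tee_quote(nonce, TRUSTED), nonce, b"card data",
                        lambda d: print("sent", len(d), "bytes"))
    tampered = tee_quote(nonce, {**TRUSTED, "app": hashlib.sha256(b"evil").hexdigest()})
    print(verify(tampered, nonce))  # False
```

Note that the whole scheme hinges on `TRUSTED` and on the verifier’s own integrity, which is exactly the weakness discussed under the pitfalls.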

In practice, TEEs typically integrate with a key-management service like AWS KMS, which releases the keys needed to decrypt sensitive data only if the TEE’s software components are proven legitimate.
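The key-release pattern can be sketched with a hypothetical in-memory `ToyKMS` class. It is not the AWS KMS API; it only mimics the idea behind AWS KMS key-policy condition keys such as `kms:RecipientAttestation:ImageSha384`, where a decrypt request succeeds only if the attested enclave image matches the policy. The sketch assumes the attestation document has already been verified; the real service additionally encrypts the released key to a public key taken from the enclave’s attestation document, which is omitted here.

```python
import hashlib
import hmac
import secrets


class ToyKMS:
    """Hypothetical KMS that releases a data key only to an attested image."""

    def __init__(self) -> None:
        # key_id -> (data key, image hash required by the key policy)
        self._keys: dict[str, tuple[bytes, str]] = {}

    def create_key(self, required_image_sha384: str) -> str:
        key_id = secrets.token_hex(8)
        self._keys[key_id] = (secrets.token_bytes(32), required_image_sha384)
        return key_id

    def release_key(self, key_id: str, attested_image_sha384: str) -> bytes:
        """Return the data key only if the (already verified) attested image matches policy."""
        data_key, required = self._keys[key_id]
        if not hmac.compare_digest(required.encode(), attested_image_sha384.encode()):
            raise PermissionError("attested image does not satisfy the key policy")
        return data_key


if __name__ == "__main__":
    good_image = hashlib.sha384(b"enclave image v1").hexdigest()
    kms = ToyKMS()
    key_id = kms.create_key(good_image)
    print(len(kms.release_key(key_id, good_image)))  # 32
    try:
        kms.release_key(key_id, hashlib.sha384(b"tampered image").hexdigest())
    except PermissionError as err:
        print("denied:", err)
```

Putting the check inside the key service, rather than in a separate verifier, means a tampered TEE never sees the decryption key at all, even if it somehow obtains the encrypted data.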

Because TEEs typically run under a hypervisor process that manages them, there is a risk that the host will gain access to sensitive data stored in the TEE’s partition of memory.

To address this, the TEE uses hardware encryption to encrypt all data in memory, decrypting it only for processing. This keeps data safe even if the TEE’s memory is read at runtime.

Enclaves have no interactive access or external networking. They can connect only to an instance owned by the same user, over a local channel. Because the environment is reachable only through that instance, the attack surface shrinks dramatically.

AWS has launched a product similar to TEEs called AWS Nitro Enclaves. Like TEEs, Nitro Enclaves have no persistent storage, no interactive access, and no external networking, and they use cryptographic attestation to provide security guarantees.

However, Nitro Enclaves rely on virtual machines and AWS Key Management Service (AWS KMS) to provide a set of features similar to what TEEs offer via EKs, AKs, and CAs. Nitro Enclaves are often branded as cloud-based TEEs; that’s a fair take, as long as you recognize that they supplement some hardware guarantees with AWS KMS’s guarantees.

Remember, security is relative. While TEEs make it drastically harder for attackers to steal decrypted sensitive data, they can still be exploited through some creative, albeit difficult, attacks.

The first group of these attacks is hardware attacks, in which an attacker needs access to the physical chip running the TEE. This is a common focus for researchers because a major goal of TEEs is to restrict the cloud provider’s access. For instance, researchers have shown that manipulating a TEE’s supply voltage in sophisticated ways can leak the TEE’s parameters. Attacks like these, which exploit a chip’s physical behavior, are commonly grouped under side-channel attacks.

Additionally, TEEs are only as trustworthy as their verifier. If the verifier itself is compromised, or its database of trusted measurements is seeded with fake values, a fake TEE can pass verification and be trusted with sensitive data.

Even so, these attacks are far harder to pull off than conventional ones. Instead of just installing malware on the machine that handles sensitive data, attackers need to target the mechanisms that verify the TEE’s legitimacy. As a result, businesses that don’t use secure enclaves are more attractive targets than organizations that have adopted TEEs.

TEEs made confidential computing practically possible—a massive advancement in security. Given the growing concerns over sensitive data falling into nefarious hands, TEEs can help ensure that only trusted code and machines are granted access.

Of course, implementing TEEs can be difficult, as it not only requires special hardware but also correctly built verification logic. This is why we built a Secure Enclaves product at Evervault; we roll attestation into an SDK so that developers don’t need to hand-roll their own protocol. Notably, the enclave runs a sidecar process that automates attestation measurement generation so that the customer’s underlying process doesn’t need to be aware it’s inside an enclave.

Whether they use a third-party tool like Evervault or implement TEEs themselves, today’s development teams should embrace confidential computing to safeguard their customers.