February 27, 2024

How we built Enclaves: Resolving clock drift in Nitro Enclaves

At Evervault, we have been running AWS Nitro Enclaves in production for almost three years. During this time, we have encountered some undocumented quirks. One of the most surprising was noticeable clock drift within as little as a day of an enclave's launch.

The first workload we ran in Nitro Enclaves at Evervault was our encryption engine. It performs no time-sensitive tasks and authenticates with internal services over mTLS using externally generated keys and certificates. Because the mTLS connection is terminated on the host, the time used for it comes from the host's clock, which Amazon Time Sync keeps accurate. The engine was also redeployed regularly for releases and security patches, so its enclaves never ran long enough for drift to show up, and we did not observe it before we began working on Enclaves.

Observed drift during the beta testing period

In an early iteration of Enclaves (formerly known as Cages), the data plane generated self-signed certificates with the enclave's attestation document embedded in the certificate's SAN. Attestation documents are valid for three hours, so the certificate had to be regenerated every three hours. Because this happened inside the enclave, the enclave's system time was used to set the certificate's “not before” and “not after” values.

When users started using enclaves during the beta testing period, some began to see intermittent “certificate not yet valid” errors in their clients. Another user, whose internal authentication used JWTs with a 5-second validity, saw token validation fail with “token issued in the future”.
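To make the certificate failure concrete: the enclave stamps “not before” from its own clock, but each client judges that value against the client's clock. The sketch below is illustrative rather than Evervault's certificate code; the `Validity` and `Check` types are hypothetical, and it models only the time comparison, not X.509 encoding.

```rust
use std::time::{Duration, SystemTime};

/// Validity window stamped into a certificate (illustrative only).
#[derive(Debug, Clone, Copy)]
struct Validity {
    not_before: SystemTime,
    not_after: SystemTime,
}

/// Outcome of a client's validity check against its own clock.
#[derive(Debug, PartialEq)]
enum Check {
    Valid,
    NotYetValid,
    Expired,
}

/// Stamp a window of `lifetime` starting at the issuer's clock reading.
/// Inside an enclave, `issuer_now` is the enclave's (possibly drifted) system time.
fn stamp_validity(issuer_now: SystemTime, lifetime: Duration) -> Validity {
    Validity {
        not_before: issuer_now,
        not_after: issuer_now + lifetime,
    }
}

/// Judge the window against the client's clock, as a TLS client would.
fn check_at(v: Validity, client_now: SystemTime) -> Check {
    if client_now < v.not_before {
        Check::NotYetValid
    } else if client_now > v.not_after {
        Check::Expired
    } else {
        Check::Valid
    }
}
```

With a hypothetical enclave clock running 2 s ahead, a three-hour certificate fails as `NotYetValid` for a client that connects immediately and becomes `Valid` once the client's clock passes the stamped “not before”. The JWT failure is the mirror image: a verifier whose clock lags the issuer's sees an issued-at time in its future.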

We were surprised by this because we hadn't come across any documentation advising us to sync the enclave clock manually to prevent drift, and had assumed that, as with other AWS services, time synchronisation was managed automatically.

Investigation

A quick curl comparing the system time on an AWS EC2 instance with the time reported by an enclave showed that there was, in fact, a significant difference after the enclave had been running for only two days.

```
$ date && curl -I https://test-time-sync.app_00000000000.cages.evervault.com/health
Thu 01 Dec 2023 10:54:17 GMT
HTTP/1.1 200 OK
date: Thu, 01 Dec 2023 10:54:15 GMT
x-evervault-ctx: C7185F901AA5986E6BF2D5D1EBC36516
```

The enclave's clock was about two seconds behind the host's, confirming that it was drifting by approximately a second per day. We apply kernel patches for security updates weekly, so no enclave runs for more than a week and the maximum drift it could accumulate is ~7 seconds. That is still significant and something we needed to fix: drift of this size would have serious implications for apps that rely on time-sensitive operations.
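The arithmetic behind these figures is small enough to write out. This sketch assumes a constant drift rate, which is a simplification (the drift depends on load), so treat its output as an estimate; the function names are illustrative.

```rust
/// Drift rate in parts per million from an observed offset after `elapsed_secs`.
fn drift_ppm(offset_secs: f64, elapsed_secs: f64) -> f64 {
    offset_secs / elapsed_secs * 1e6
}

/// Offset accumulated over `elapsed_secs`, assuming a constant `ppm` drift rate.
fn projected_offset(ppm: f64, elapsed_secs: f64) -> f64 {
    ppm / 1e6 * elapsed_secs
}

/// Seconds in one day.
const DAY_SECS: f64 = 86_400.0;
```

An offset of about 2 s after two days, `drift_ppm(2.0, 2.0 * DAY_SECS)`, is roughly 11.6 ppm, and `projected_offset` at that rate over `7.0 * DAY_SECS` gives the ~7 s weekly worst case.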

As a stopgap, we set up a cron job to restart enclaves periodically while we investigated the issue.

Resolving clock drift with host time

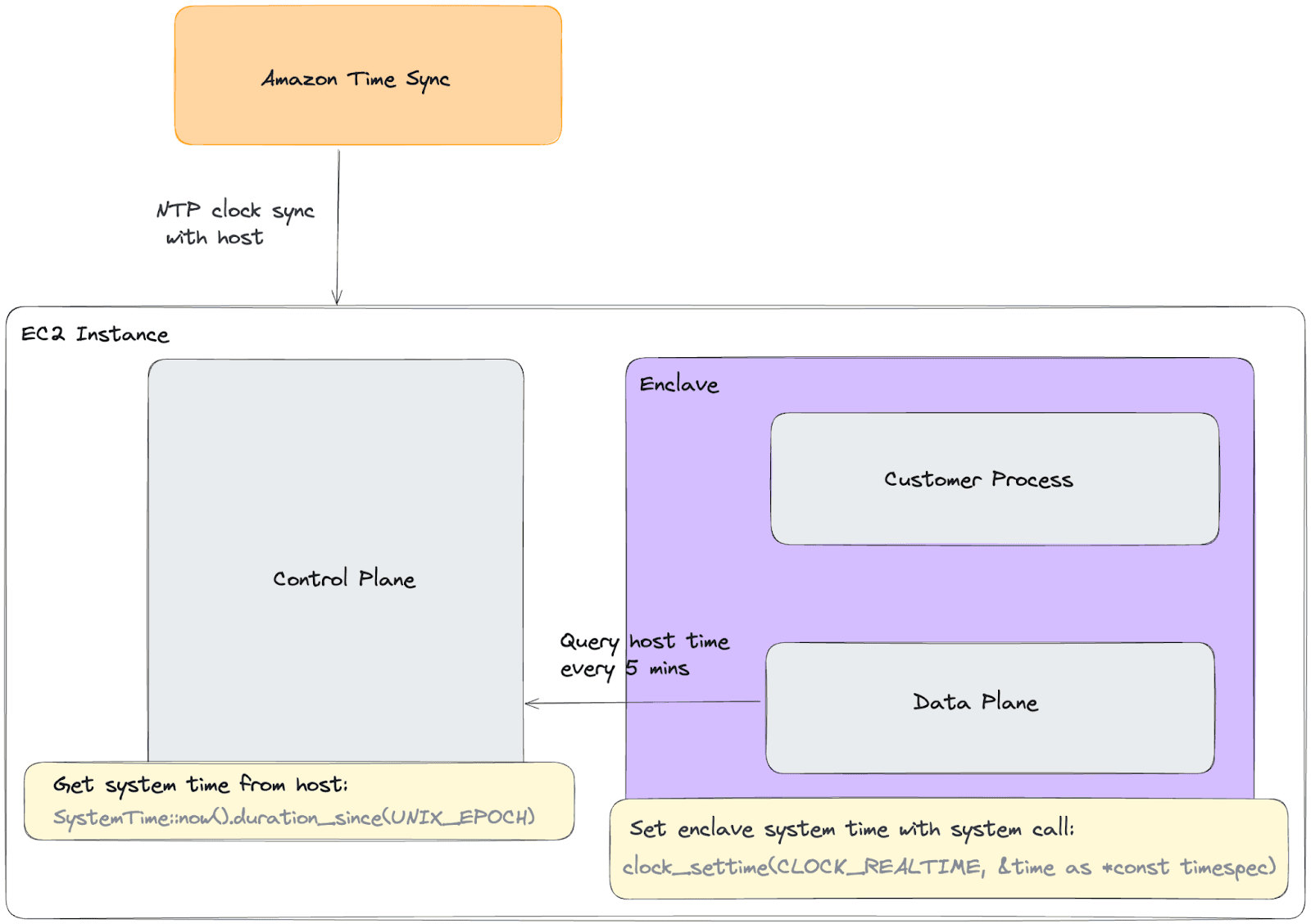

We contacted AWS support to learn more about what was causing the issue. The Nitro team informed us that the enclave clock is never synchronised after start-up and that the amount of drift is proportional to the load on the enclave. Without intervention, a long-running enclave would keep drifting further from real time indefinitely.

We discussed possible solutions with the Nitro Enclaves team to determine how best to resolve the issue. The team advised that we could sync the enclave time with the hypervisor clock via the /dev/ptp0 device, with the caveat that it is not as accurate as the time from Amazon Time Sync. Amazon Time Sync keeps EC2 instances in sync with millisecond accuracy (and, more recently, microsecond accuracy on supported instances).
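As a minimal sketch of what reading the hypervisor clock could look like, assuming a 64-bit Linux guest where /dev/ptp0 is exposed as a standard PTP clock device: Linux lets a process open such a device and read it through `clock_gettime` with a "dynamic" clock id derived from the file descriptor. To stay dependency-free, `clock_gettime` and `struct timespec` are declared by hand rather than taken from the libc crate; `read_ptp` is a hypothetical helper name.

```rust
use std::fs::File;
use std::io;
use std::os::raw::{c_int, c_long};
use std::os::unix::io::AsRawFd;

// Layout of `struct timespec` on 64-bit Linux (time_t and long are both 64-bit).
#[repr(C)]
#[derive(Debug, Default)]
struct Timespec {
    tv_sec: c_long,
    tv_nsec: c_long,
}

unsafe extern "C" {
    // POSIX clock_gettime(2), from the C library that std already links against.
    fn clock_gettime(clk_id: c_int, tp: *mut Timespec) -> c_int;
}

/// Map an open PTP device file descriptor to a dynamic clock id, mirroring the
/// kernel's FD_TO_CLOCKID(fd) = ((~fd) << 3) | CLOCKFD, where CLOCKFD = 3.
fn fd_to_clockid(fd: c_int) -> c_int {
    ((!fd) << 3) | 3
}

/// Read the current time from the PTP clock device at `path`, e.g. "/dev/ptp0".
fn read_ptp(path: &str) -> io::Result<Timespec> {
    // The device must stay open while its dynamic clock id is in use.
    let device = File::open(path)?;
    let mut ts = Timespec::default();
    // SAFETY: `ts` is a valid, writable timespec for the duration of the call.
    let rc = unsafe { clock_gettime(fd_to_clockid(device.as_raw_fd()), &mut ts) };
    if rc == 0 {
        Ok(ts)
    } else {
        Err(io::Error::last_os_error())
    }
}
```

The result is raw seconds and nanoseconds; applying it to the system clock would be the same `clock_settime` step used with any other time source.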

We decided to test whether we could sync the enclave time with the host's closely enough to guarantee an acceptable level of accuracy. We were confident the host time was accurate; what we needed to confirm was that a request from the enclave to the host had low enough latency that we could use the returned time directly, without more complicated correction.

In the data plane, we added a tokio task that, after startup, queries the host for its system time every five minutes. It then calls clock_settime through Rust's libc crate to overwrite the kernel clock with the time from the host.
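The sync loop can be sketched as follows. This is not Evervault's code: to compile with no dependencies it uses `std::thread` in place of a tokio task and a hand-declared `clock_settime` binding in place of the libc crate, and `fetch_host_time` is a hypothetical placeholder for however the enclave actually requests the host's time. Setting `CLOCK_REALTIME` requires the `CAP_SYS_TIME` capability.

```rust
use std::io;
use std::os::raw::{c_int, c_long};
use std::thread;
use std::time::Duration;

// Layout of `struct timespec` on 64-bit Linux (time_t and long are both 64-bit).
#[repr(C)]
struct Timespec {
    tv_sec: c_long,
    tv_nsec: c_long,
}

// CLOCK_REALTIME is clock id 0 on Linux.
const CLOCK_REALTIME: c_int = 0;

unsafe extern "C" {
    // POSIX clock_settime(2), from the C library that std already links against.
    fn clock_settime(clk_id: c_int, tp: *const Timespec) -> c_int;
}

/// Split a time since the Unix epoch into whole seconds and nanoseconds.
fn to_timespec(since_epoch: Duration) -> Timespec {
    Timespec {
        tv_sec: since_epoch.as_secs() as c_long,
        tv_nsec: since_epoch.subsec_nanos() as c_long,
    }
}

/// Overwrite the kernel's realtime clock. Fails with EPERM without CAP_SYS_TIME.
fn set_realtime(since_epoch: Duration) -> io::Result<()> {
    let ts = to_timespec(since_epoch);
    // SAFETY: `ts` is a valid, initialised timespec that outlives the call.
    if unsafe { clock_settime(CLOCK_REALTIME, &ts) } == 0 {
        Ok(())
    } else {
        Err(io::Error::last_os_error())
    }
}

/// Sync once immediately, then every `interval`, until the process exits.
/// `fetch_host_time` (hypothetical) returns the host's time since the Unix epoch.
fn spawn_time_sync<F>(mut fetch_host_time: F, interval: Duration) -> thread::JoinHandle<()>
where
    F: FnMut() -> io::Result<Duration> + Send + 'static,
{
    thread::spawn(move || loop {
        // A failed fetch or set is logged and retried on the next tick.
        if let Err(e) = fetch_host_time().and_then(set_realtime) {
            eprintln!("time sync failed: {e}");
        }
        thread::sleep(interval);
    })
}
```

A five-minute cadence would be `spawn_time_sync(fetch, Duration::from_secs(300))`. In a tokio version, the loop would instead be a task started with `tokio::spawn` that awaits `tokio::time::interval(Duration::from_secs(300)).tick()` (whose first tick completes immediately), and `libc::clock_settime(libc::CLOCK_REALTIME, &ts)` would replace the hand-written binding.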

We logged the round-trip time from the enclave to the host, which varies but is approximately 700-900μs. Because that round trip includes the outbound request made before the host reads its clock, we very roughly estimated that the time we apply is off by about 500μs. Our aim was close to millisecond accuracy, so we judged this sufficient for now. If we need microsecond accuracy in the future, we will need to implement a more robust NTP algorithm.
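If the host returned timestamps for both when it received the request and when it replied, the enclave could correct for latency with the standard NTP on-wire calculation from RFC 5905. The sketch below illustrates that calculation only; it is not what the data plane described here does, the `Exchange` type and its field names are hypothetical, and all timestamps are assumed to be nanoseconds since the Unix epoch.

```rust
/// Timestamps from one request/response exchange, in ns since the Unix epoch.
#[derive(Debug, Clone, Copy)]
struct Exchange {
    t1: i64, // enclave sends the request (enclave clock)
    t2: i64, // host receives the request (host clock)
    t3: i64, // host sends the response (host clock)
    t4: i64, // enclave receives the response (enclave clock)
}

/// NTP-style offset of the host clock relative to the enclave clock, and the
/// round-trip delay excluding the host's processing time. Assumes both legs take
/// equal time; any asymmetry becomes offset error of at most delay / 2.
fn offset_and_delay(x: Exchange) -> (i64, i64) {
    let offset = ((x.t2 - x.t1) + (x.t3 - x.t4)) / 2;
    let delay = (x.t4 - x.t1) - (x.t3 - x.t2);
    (offset, delay)
}
```

For example, with the enclave clock 2 s behind and each leg taking 400μs, the offset comes out at exactly 2 s and the delay at 800μs. When the host reports a single reading (`t2 == t3`), the offset reduces to `t2` minus the midpoint of `t1` and `t4` (Cristian's algorithm), and with 700-900μs round trips, leg asymmetry could leave an error of at most 350-450μs.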

Next Steps

Our next step is to use Cloudflare's NTS (Network Time Security) service to sync time from a trusted remote NTP server, with the exchange authenticated using keys established over TLS. That traffic will leave the enclave through the same blind proxying we use for network egress.

Conclusion

After discovering that a Nitro Enclave's clock is never synchronised after the enclave starts, we found that the drift could be remediated by periodically polling the EC2 host's time, which Amazon Time Sync keeps reliably synchronised. This reduced clock drift from up to 7 seconds over a week to a few hundred microseconds.