Attestation is the concept of verifying information about an application you are communicating with, such as what hardware it is running on or what security features it has. In this post, we'll be exploring attestation of the code deployed in an application. We'll show how it can provide a mechanism for secure communication and why it needs to be easier.

Code attestation is the concept of verifying what code has been deployed in an application you are communicating with. Trusted Execution Environments (TEEs) support this through signed attestation documents (ADs), which contain information about the application running in the TEE. The user can inspect the AD to check that this information is as expected.

For this to work, the AD must contain something that lets the user attest what code has been deployed. The simplest approach would be to include the application's full source code in the AD, so the user could compare it one-to-one with the code they expect. However, this is impractical when the application contains a large amount of code. Instead, ADs typically include a measurement of the code, usually a hash, and the user compares the measurements in the AD against the measurements of the code they expect.
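
To make the idea of a measurement concrete, here is a minimal Python sketch: a single SHA-384 digest over every file in an application, which the user recomputes from the code they expect and compares with the reported value. The file layout, the helper name `measure`, and the choice of SHA-384 are assumptions for illustration; real TEEs each define their own measurement format (Nitro's PCRs and SGX's MRENCLAVE are computed differently from this).

```python
import hashlib


def measure(files: dict[str, bytes]) -> str:
    """Hash every file (path and contents) in a fixed order into one digest.

    Sorting by path makes the result independent of dict order, and the
    length prefixes stop two different file sets from producing the same
    byte stream (e.g. {"a": b"bc"} versus {"ab": b"c"}).
    """
    h = hashlib.sha384()
    for path in sorted(files):
        encoded_path = path.encode()
        data = files[path]
        h.update(len(encoded_path).to_bytes(8, "big"))
        h.update(encoded_path)
        h.update(len(data).to_bytes(8, "big"))
        h.update(data)
    return h.hexdigest()


# Hypothetical application: its own code plus one dependency.
expected_app = {
    "app/main.py": b"print('processing data')\n",
    "deps/helper.py": b"def helper():\n    return 1\n",
}
expected = measure(expected_app)  # computed by the user from the code they expect

# The same application with a modified dependency.
deployed_app = {**expected_app, "deps/helper.py": b"def helper():\n    return 2\n"}

print(measure(expected_app) == expected)  # True
print(measure(deployed_app) == expected)  # False: the changed dependency changes the measurement
```

The dependency is covered by the measurement just like the application's own code. The flip side is that if the expected code already contains a malicious dependency, the measurements will match anyway.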

It is important to note that code attestation covers all of the code deployed in the TEE, not just the parts the developer wrote. If the developer hasn't been careful and has included a malicious dependency, the attestation will still succeed because the measurements match, but the deployed code may be doing something the user doesn't expect.

There is a tradeoff here between usability and security. If the code in your application is very small, with few dependencies, you will be more confident that you are attesting code that does what you expect it to do. However, less code generally means less functionality. Having a whole operating system in a TEE gives you a wide range of functionality, but is more difficult to audit. For this reason, when deploying containers in TEEs, it is recommended to use minimal, audited base images.

Public-key cryptography can be used to establish secure communication with a TEE across an arbitrary channel. This depends heavily on the TEE's support for code attestation, as the user needs to know that the private key only ever exists within the TEE. We'll illustrate this with a secure example, then show how it breaks down without code attestation.

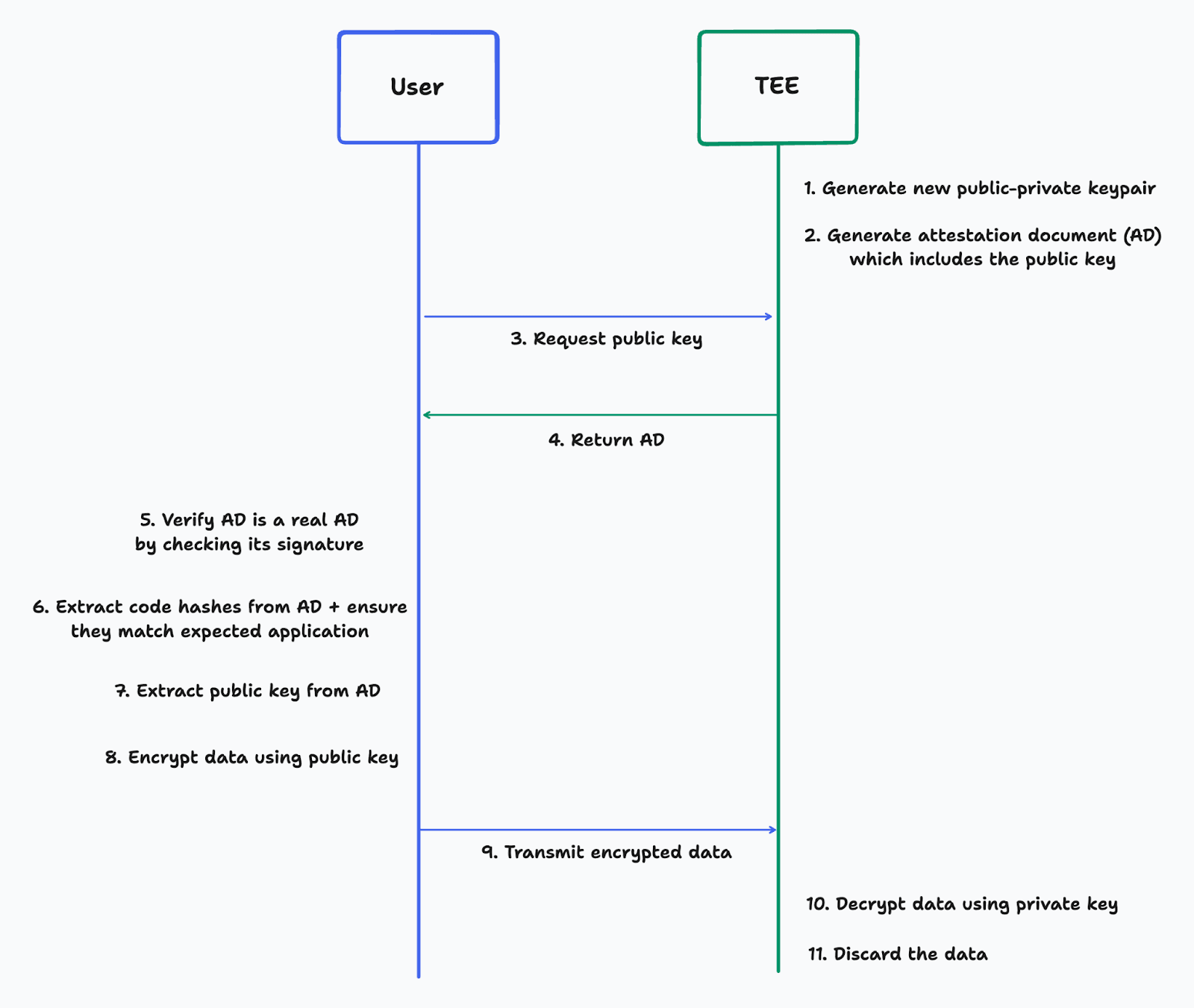

Here is a flow diagram of a user establishing secure communication with a TEE using public-key cryptography:

If the user can attest that the application in the TEE only has the functionality shown, the user knows that the data they encrypt using the public key can only ever be decrypted and accessed within the TEE. This attestation is done in Step 6. The user has a hash of the application they expect to communicate with, which is compared with the measurements in the AD before transmitting any private data.
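
The check in Step 6 can be sketched in Python as a gate that only releases the TEE's public key once the AD passes verification, so no private data can be encrypted for an unverified TEE. The `AttestationDocument` fields, the signed-payload layout, and every helper name here are assumptions for illustration. A real client verifies a signature issued by the TEE vendor; in this sketch an HMAC stands in for that so it runs with only the standard library.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AttestationDocument:
    measurement: str   # hex hash of the code running in the TEE (assumed format)
    public_key: bytes  # public key generated inside the TEE
    signature: bytes   # made by the TEE hardware over the two fields above


class AttestationError(Exception):
    """Raised when the AD cannot be trusted; no private data should be sent."""


def signed_payload(measurement: str, public_key: bytes) -> bytes:
    # The signature covers the measurement and the public key together, so
    # neither can be swapped out without invalidating it. A hex measurement
    # never contains b"|", so the first separator splits the fields unambiguously.
    return measurement.encode() + b"|" + public_key


def trusted_public_key(
    ad: AttestationDocument,
    expected_measurement: str,
    verify_signature: Callable[[bytes, bytes], bool],
) -> bytes:
    """Return the TEE's public key only if the AD is genuine and the code matches."""
    if not verify_signature(signed_payload(ad.measurement, ad.public_key), ad.signature):
        raise AttestationError("attestation document signature is invalid")
    if ad.measurement != expected_measurement:
        raise AttestationError("TEE is not running the expected code")
    return ad.public_key


# Stand-in for the vendor's signature scheme (assumption: HMAC replaces a
# real vendor-issued signature so the sketch is self-contained).
VENDOR_KEY = b"stand-in vendor key"


def vendor_sign(payload: bytes) -> bytes:
    return hmac.new(VENDOR_KEY, payload, hashlib.sha256).digest()


def vendor_verify(payload: bytes, signature: bytes) -> bool:
    return hmac.compare_digest(vendor_sign(payload), signature)


expected = hashlib.sha384(b"expected application").hexdigest()
ad = AttestationDocument(
    expected, b"tee-public-key", vendor_sign(signed_payload(expected, b"tee-public-key"))
)
key = trusted_public_key(ad, expected, vendor_verify)
print(key)  # only now would the user encrypt their data with this key
```

Raising an exception rather than returning a flag makes it hard for calling code to forget the check and encrypt data for an unverified key anyway.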

This model can be extended to TLS, which is how Cages Attestation in TLS works.
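
As a rough illustration of the TLS extension, here is a hypothetical binding, not a description of how Cages actually implements it: the TEE's TLS certificate takes the place of the bare public key, and an already-verified AD carries a SHA-256 digest of that certificate, so the client can check that the certificate presented in the handshake is the attested one. The digest field and the choice to hash the whole DER-encoded certificate are assumptions. In Python, `ssl.SSLSocket.getpeercert(binary_form=True)` returns the peer certificate's DER bytes.

```python
import hashlib


def certificate_is_attested(cert_der: bytes, attested_cert_digest: bytes) -> bool:
    """Return True if the handshake certificate is the one recorded in the AD.

    cert_der: the server certificate in DER form, as presented in the TLS handshake.
    attested_cert_digest: SHA-256 digest of that certificate, read from a
    (hypothetical) field of an AD whose signature and measurement were already checked.
    """
    return hashlib.sha256(cert_der).digest() == attested_cert_digest


# Placeholder bytes stand in for real certificates.
attested = hashlib.sha256(b"certificate generated inside the TEE").digest()
print(certificate_is_attested(b"certificate generated inside the TEE", attested))  # True
print(certificate_is_attested(b"certificate held by Eve", attested))  # False
```

If the certificate's private key is generated inside the TEE and the attested code never exports it, a match tells the client that the TLS session terminates inside the attested application.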

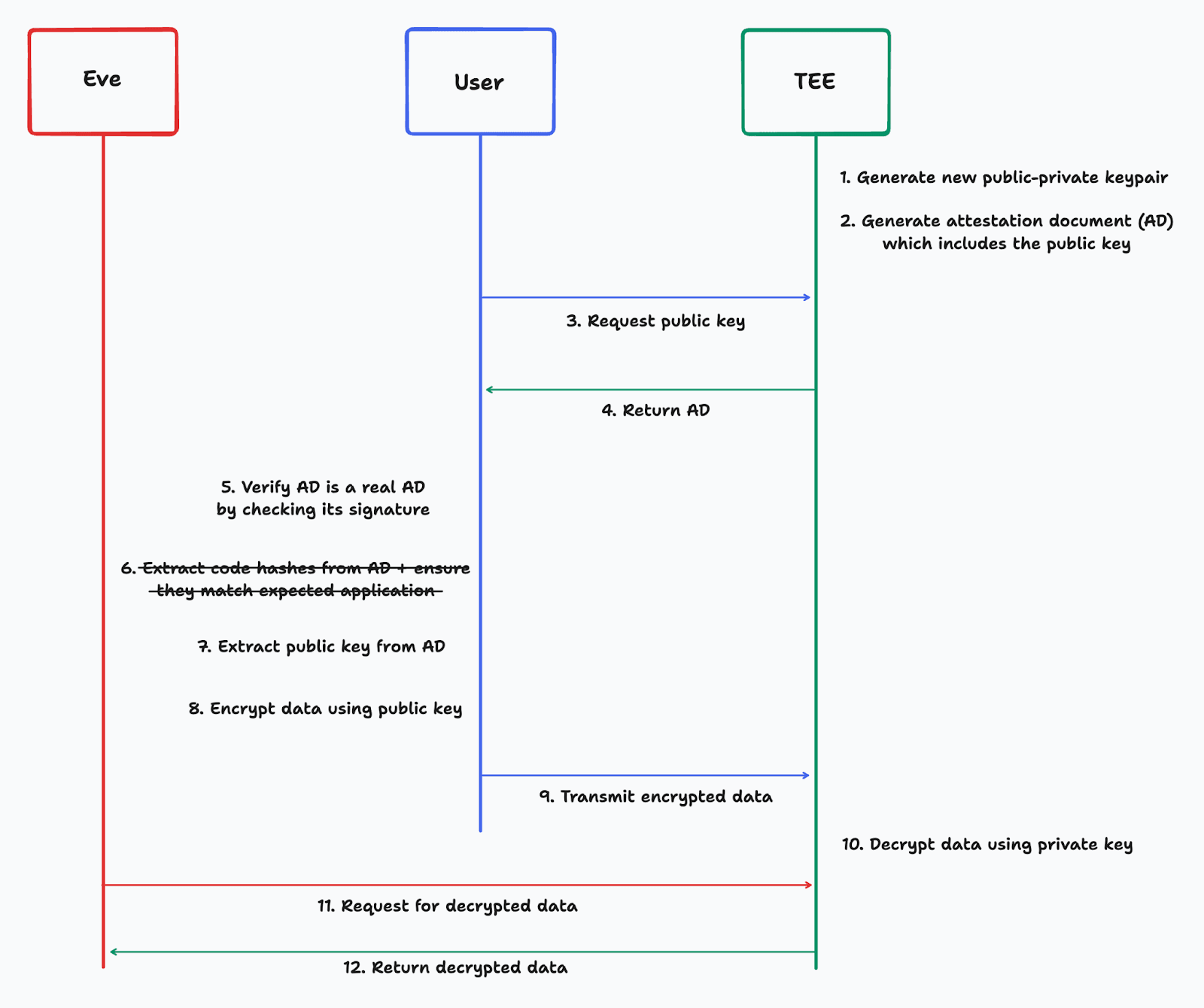

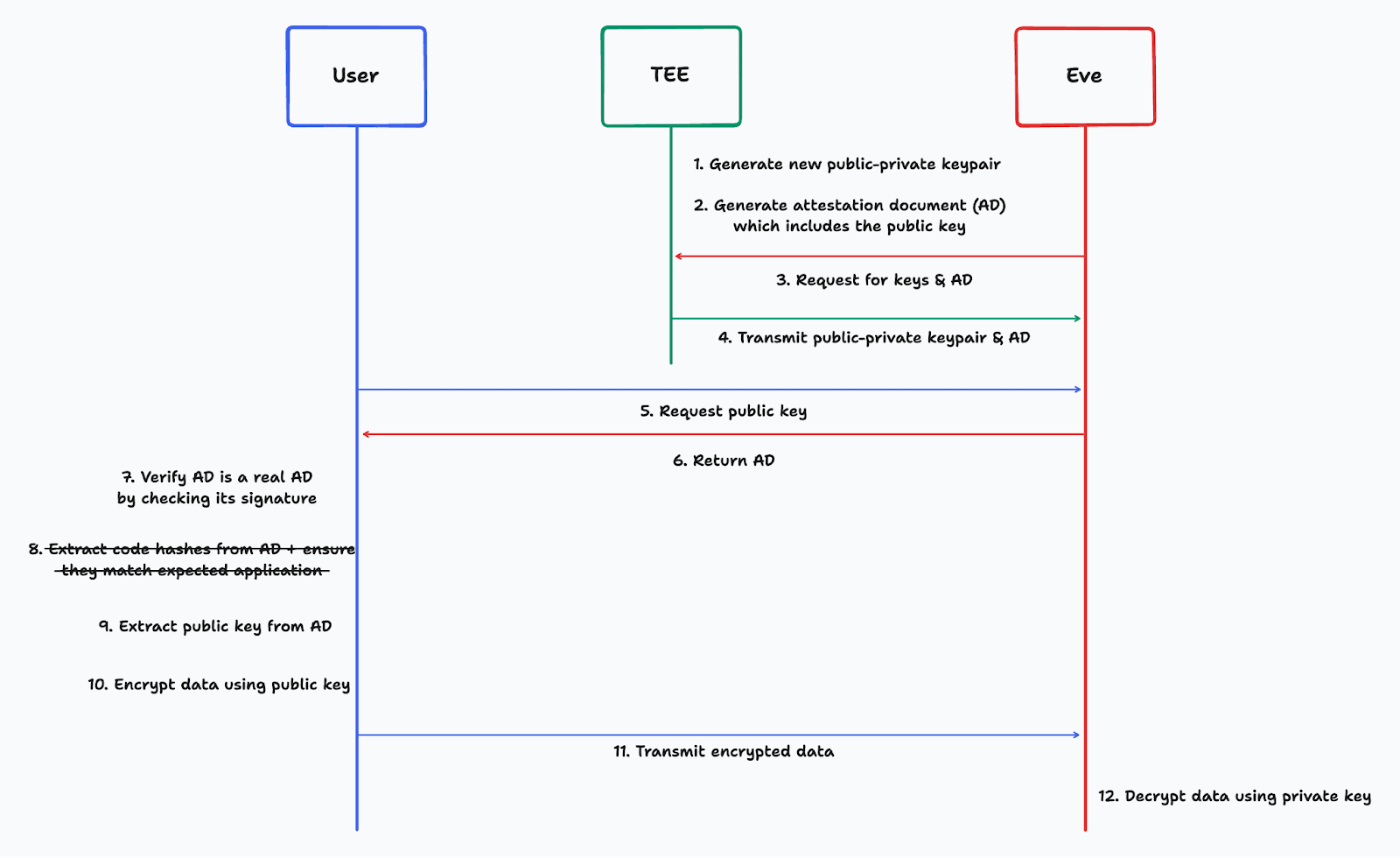

Now let's consider the case where the code attestation step is removed. This opens up several attacks. We'll show a couple of examples to demonstrate this.

In the first attack, the application includes functionality for saving the decrypted data and leaking it to another party, Eve. As the user doesn't attest the functionality of the application, they have no knowledge of this.

In the second attack, the application leaks its public-private keypair from the TEE to Eve, along with an attestation document. Eve can then impersonate the TEE: the user thinks they are communicating with a TEE, while they are actually handing their data to Eve, who is outside it.
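
Both attacks get past a client that only checks that the AD is genuinely signed, because the malicious application really is running in a TEE. The Python sketch below contrasts that client with one that also checks the measurement, using the second attack: Eve replays a genuine AD produced by the key-leaking application. An HMAC stands in for the vendor's signature, and the AD layout, application "code", and function names are made up for illustration.

```python
import hashlib
import hmac

# Assumption: an HMAC key stands in for the TEE vendor's signing key.
HW_KEY = b"stand-in for the TEE vendor's signing key"


def hardware_sign(measurement: str, public_key: bytes) -> bytes:
    # Only genuine TEE hardware could produce this signature in the real system.
    return hmac.new(HW_KEY, measurement.encode() + b"|" + public_key, hashlib.sha256).digest()


def signature_ok(ad: dict) -> bool:
    expected = hardware_sign(ad["measurement"], ad["public_key"])
    return hmac.compare_digest(expected, ad["signature"])


def accept_without_code_attestation(ad: dict) -> bool:
    # Checks only that the AD came from a TEE, not what code the TEE runs.
    return signature_ok(ad)


def accept_with_code_attestation(ad: dict, expected_measurement: str) -> bool:
    # Also checks that the TEE runs exactly the code the user expects.
    return signature_ok(ad) and ad["measurement"] == expected_measurement


expected_app = hashlib.sha384(b"decrypt and process only").hexdigest()
leaky_app = hashlib.sha384(b"decrypt, process, and send keys to Eve").hexdigest()

# The leaky app really runs in a TEE, so its AD carries a valid signature.
# After leaking the keypair, Eve can replay this AD from outside the TEE.
leaked_ad = {
    "measurement": leaky_app,
    "public_key": b"tee-public-key",
    "signature": hardware_sign(leaky_app, b"tee-public-key"),
}

print(accept_without_code_attestation(leaked_ad))             # True: the attack succeeds
print(accept_with_code_attestation(leaked_ad, expected_app))  # False: the attack is caught
```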

These attacks demonstrate the importance of code attestation and why we are cheerleading for it.

TEE providers usually allow you to sign an application using a private key before it is run in the TEE. The ADs produced by the TEE then include the signature of the running application, which allows you to check that it was one you deployed. If you are the only person interacting with the application, it might be fine to just check the signature, as you can trust that the applications you've signed weren't malicious.

However, this breaks down once you have other users interacting with the application. They shouldn't need to place all of their trust in your signature, which would include trusting that you're keeping your signing key in a secure location. They should be able to attest the code itself, so they know they're not in contact with a malicious application.

So far, we've argued the importance of code attestation; now, let's look at how well it is supported in the major TEE technologies.

Intel SGX ADs include a field called MRENCLAVE, which is a "SHA-256 digest that identifies, cryptographically, the code, data, and stack placed inside the enclave…". Intel's docs don't make it particularly obvious how a user can compute this MRENCLAVE value, but thankfully it can be done using the `sgx_sign` tool.

Nitro Enclaves' ADs contain fields called Platform Configuration Registers (PCRs), which are hashes of different parts of the enclave image: for example, PCR0 measures the enclave image file, PCR1 the Linux kernel and bootstrap, and PCR2 the application. When a user builds an Enclave image, its PCRs are displayed, and the user can compare them with those shown in an AD. I think this is the most user-friendly support for code attestation.
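
Here is a minimal sketch of that comparison in Python. It assumes the build output was saved as JSON with the `Measurements` object (`PCR0`, `PCR1`, `PCR2` as hex strings) that `nitro-cli build-enclave` prints, and that the AD has already been signature-verified and CBOR-decoded elsewhere into a dict whose `pcrs` entry maps register indices to raw bytes. Decoding the COSE_Sign1 envelope and verifying the AWS certificate chain are not shown, and the PCR values below are made-up placeholders.

```python
import json


def pcrs_from_build_output(build_json: str) -> dict[int, bytes]:
    """Extract PCR0 to PCR2 from the JSON printed when building the enclave image."""
    measurements = json.loads(build_json)["Measurements"]
    return {i: bytes.fromhex(measurements[f"PCR{i}"]) for i in (0, 1, 2)}


def pcrs_match(expected: dict[int, bytes], attested_pcrs: dict[int, bytes]) -> bool:
    """True if every expected PCR appears in the AD with exactly the same value."""
    return all(attested_pcrs.get(i) == value for i, value in expected.items())


# Placeholder build output; real PCRs are 48-byte SHA-384 values.
build_output = json.dumps({
    "Measurements": {
        "HashAlgorithm": "Sha384 { ... }",
        "PCR0": "aa" * 48,
        "PCR1": "bb" * 48,
        "PCR2": "cc" * 48,
    }
})
expected = pcrs_from_build_output(build_output)

# Placeholder for an already-verified, already-decoded AD payload.
ad_payload = {"pcrs": {0: bytes.fromhex("aa" * 48), 1: bytes.fromhex("bb" * 48),
                       2: bytes.fromhex("cc" * 48), 3: bytes(48)}}
print(pcrs_match(expected, ad_payload["pcrs"]))  # True
```

Only the PCRs taken from the build are compared, so extra registers in the AD (such as PCR3 above) don't affect the result.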

AMD SEV-SNP (SEV) is a technology that allows users to run VMs in a TEE. The documentation I've seen on attestation in SEV doesn't include much information about code attestation. For example, Microsoft Azure's guest attestation docs for confidential VMs show how to attest that the VM "runs on the correct hardware platform and/or secure boot setting", but don't explain how to perform code attestation. SEV's documentation mentions concepts like "guest measurements", so it seems possible, but I haven't been able to find any tutorials on how a user can compute these measurements.

TDX is another technology for running VMs in TEEs, and seems to be Intel's competitor to SEV. Like SEV, its docs mention VM measurements, but I haven't seen any examples of how a user can compute them. It is still early days for TDX, so hopefully tutorials for this are on the way.

TEE technology potentially makes it possible for all client-server communication to achieve the security model described in this post. However, to achieve that, code attestation needs to be easier. Perhaps an expert in SEV or TDX will read this and tell me that I'm just not skilled enough, and that I should be able to figure out how to do it. But for this security model to be widely adopted, users with even less knowledge of TEEs than I have should be able to perform meaningful code attestation.

Engineers deploying an application into a TEE will likely have some domain knowledge about attestation (for now), but the application's end-user likely won't, especially if it is a public service. It shouldn't only be the deployer that knows how to attest the code. The end-user should also find it simple and understandable.

Given that Cages already bridges many of the usability gaps in Nitro Enclaves, perhaps we could explore more ways to make attestation accessible too.